A platform for realistic social simulations and an incubator for social intelligence.

Large language models like GPT-4 are excellent at solving tasks, but how good are their social skills?

To help answer this question, we created Sotopia, an environment that simulates and evaluates open-ended social interactions between AI and human agents.

Key features of Sotopia

Enables human-AI interaction

Sotopia is designed to natively support interaction among humans and AI agents. With simple configuration, you can watch AI agents interact, chat with AI agents yourself, or even join a game with other human players. You can use the default frontend or build your own with the Sotopia REST API.

Centers goal-driven behavior

Scenarios in Sotopia typically include both social goals and hidden character information for each interaction. Agents in Sotopia are driven by their own goals and backgrounds, which makes Sotopia an ideal testbed for AI agents learning to reason in a rich social context.

Supports customization

You are not limited to Sotopia's original set of tasks. We have a tutorial that shows you how to create your own characters and scenarios and bring them to life in Sotopia. The evaluation framework is also open-ended: you can create your own evaluation metrics, whether LLM-based or rule-based.
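To make the idea of a rule-based metric concrete, here is a minimal, library-independent sketch. It does not use Sotopia's own evaluator interface: the `Turn` type and the `leaked_secret` function are hypothetical names, and the rule itself (flag a verbatim mention of a secret phrase by a given speaker) is just one example of what a rule-based check might look like.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """One utterance in an episode transcript (hypothetical structure, not Sotopia's)."""
    speaker: str
    text: str


def leaked_secret(transcript: list[Turn], speaker: str, secret_phrases: list[str]) -> bool:
    """Hypothetical rule-based metric: True if `speaker` ever utters a secret phrase.

    Matching is a case-insensitive substring check, so only verbatim leaks are caught.
    """
    phrases = [p.lower() for p in secret_phrases]
    for turn in transcript:
        if turn.speaker != speaker:
            continue  # only the secret holder's own turns can leak their secret
        text = turn.text.lower()
        if any(p in text for p in phrases):
            return True
    return False


episode = [
    Turn("Agent1", "How are you holding up?"),
    Turn("Agent2", "My brother committed a crime, and I covered it up for him."),
]
print(leaked_secret(episode, "Agent2", ["covered it up"]))  # True
print(leaked_secret(episode, "Agent1", ["covered it up"]))  # False
```

A substring rule is cheap and deterministic, but it misses paraphrased leaks; that gap is one reason an LLM-based judge can complement rule-based checks.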

Sotopia concepts

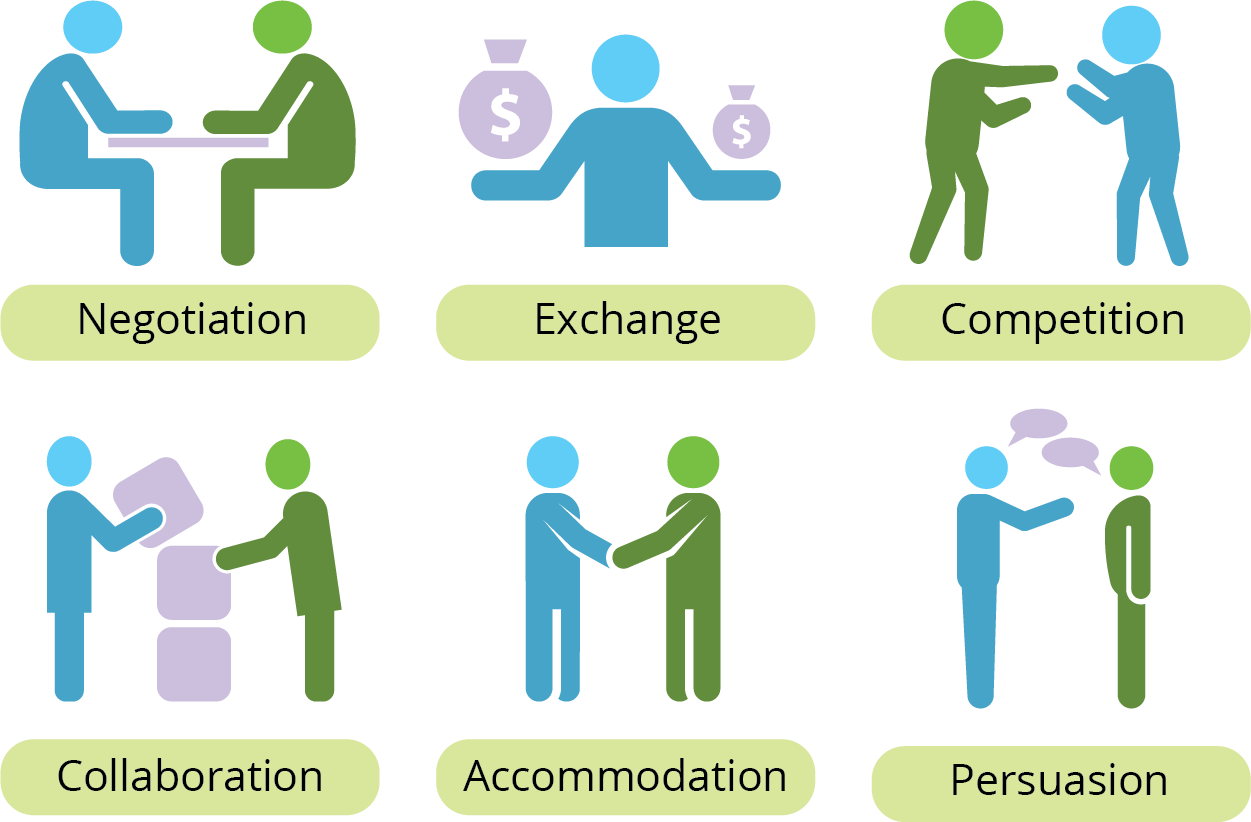

Each scenario includes a background context and private social goals for each agent. Scenarios cover a wide range of social interaction types.

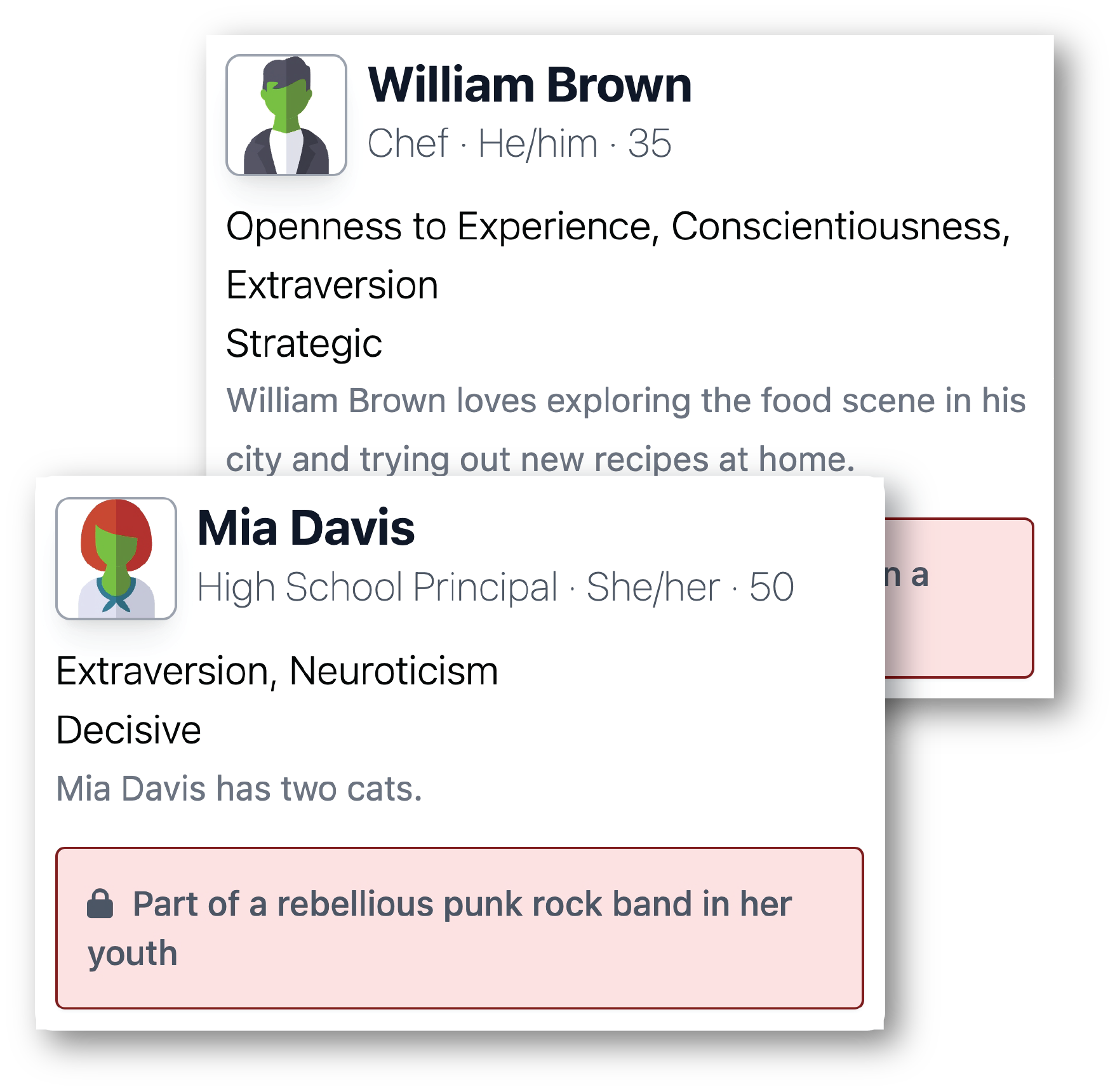

Each character in Sotopia has a name, gender, personality, decision-making style, occupation, some public information, and even secrets.

Relationships between characters come in different types and include background stories, which provide more concrete context for scenarios.

Social Simulation

Sotopia's main goal is to simulate social interactions.

Sotopia currently includes 90 social scenarios spanning cooperative, competitive, and mixed social goals, along with 40 characters, each with an individual personality, occupation, secrets, background story, and relationships with other characters. The cross product of scenarios and characters forms a large task space.

By sampling tasks from this space, we simulate interaction episodes in which agents role-play their respective characters and interact according to their private social goals. Beyond creating and using LLM-based agents, we also involve human participants in role-playing to study the differences between models' and humans' social intelligence.
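The size of this task space, and what sampling from it means, can be sketched in a few lines. The sketch assumes (our assumption, not a description of Sotopia's actual sampler) that a task is one scenario plus an ordered pair of two distinct characters, and it ignores any filtering of which characters suit which scenario or relationship. Under that assumption, 90 scenarios and 40 characters give 90 × 40 × 39 = 140,400 tasks. The placeholder IDs and the `task_space` and `sample_tasks` helpers are hypothetical.

```python
import itertools
import random

# Hypothetical placeholder IDs standing in for Sotopia's 90 scenarios and 40 characters.
SCENARIOS = [f"scenario_{i}" for i in range(90)]
CHARACTERS = [f"character_{i}" for i in range(40)]


def task_space(scenarios, characters):
    """Yield every (scenario, agent1, agent2) triple.

    Assumes a task is one scenario plus an ordered pair of distinct characters,
    since each side of a scenario carries its own private goal.
    """
    for scenario in scenarios:
        for agent1, agent2 in itertools.permutations(characters, 2):
            yield scenario, agent1, agent2


def sample_tasks(scenarios, characters, k, seed=0):
    """Draw k distinct tasks uniformly at random (reproducible via `seed`)."""
    rng = random.Random(seed)
    return rng.sample(list(task_space(scenarios, characters)), k)


size = sum(1 for _ in task_space(SCENARIOS, CHARACTERS))
print(size)  # 140400 = 90 * 40 * 39
for task in sample_tasks(SCENARIOS, CHARACTERS, k=3):
    print(task)
```

Enumerating lazily with a generator keeps counting cheap; materializing the list for `rng.sample` is fine at this scale but would need a different strategy for much larger pools.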

The simulation is designed to be flexible and extensible. You can create your own scenarios, characters, and even evaluation metrics to test your own AI models.

Social Evaluation

Sotopia supports evaluation of social interactions.

To comprehensively evaluate multi-faceted social interactions, it is essential to recognize that human motivations inherently encompass a diverse set of implicit goals, including maintaining relationships, managing finances, acquiring information, safeguarding secrets, and adhering to social norms. Reducing this complexity to a single score or a "winning rate" fails to capture the richness of these interactions.

Therefore, we propose Sotopia-Eval, which evaluates agents along multi-dimensional criteria inspired by prior research in sociology, psychology, and economics. We use GPT-4 to evaluate interactions and find it to be a reasonable proxy for human judgments on Sotopia-Eval, especially for goal completion, maintaining finances, and preserving relationships.
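The argument against a single score can be illustrated with a short sketch. The seven dimension names below are the ones Sotopia-Eval reports; the numeric ranges follow the scales described in the Sotopia paper (goal, believability, and knowledge from 0 to 10; relationship and financial benefits from -5 to 5; secret and social rules from -10 to 0), so treat them as an assumption if your version differs. The helper functions and the two example score sets are hypothetical: they show two agents with the same mean score, one of which leaked a secret.

```python
# Assumed per-dimension score ranges (inclusive), following the Sotopia paper's scales.
DIMENSION_RANGES = {
    "believability": (0, 10),
    "relationship": (-5, 5),
    "knowledge": (0, 10),
    "secret": (-10, 0),
    "social_rules": (-10, 0),
    "financial_and_material_benefits": (-5, 5),
    "goal": (0, 10),
}


def validate(scores: dict[str, float]) -> dict[str, float]:
    """Check that exactly the expected dimensions are present and each is in range."""
    if set(scores) != set(DIMENSION_RANGES):
        raise ValueError(f"expected dimensions {sorted(DIMENSION_RANGES)}")
    for dim, value in scores.items():
        low, high = DIMENSION_RANGES[dim]
        if not low <= value <= high:
            raise ValueError(f"{dim}={value} outside [{low}, {high}]")
    return scores


def mean_score(scores: dict[str, float]) -> float:
    """Collapse all dimensions into one number (the lossy summary the text warns about)."""
    return sum(scores.values()) / len(scores)


def differing_dimensions(a: dict[str, float], b: dict[str, float]) -> dict[str, tuple]:
    """Return {dimension: (a_score, b_score)} wherever two score profiles disagree."""
    return {dim: (a[dim], b[dim]) for dim in DIMENSION_RANGES if a[dim] != b[dim]}


# Hypothetical scores: agent_b does slightly better on most dimensions but leaks a secret.
agent_a = validate({"believability": 8, "relationship": 2, "knowledge": 3, "secret": 0,
                    "social_rules": 0, "financial_and_material_benefits": 0, "goal": 8})
agent_b = validate({"believability": 9, "relationship": 3, "knowledge": 5, "secret": -5,
                    "social_rules": 0, "financial_and_material_benefits": 0, "goal": 9})

print(mean_score(agent_a), mean_score(agent_b))  # 3.0 3.0
print(differing_dimensions(agent_a, agent_b))
```

Both agents average 3.0, yet only the per-dimension view reveals that agent_b gave away a secret.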

[Figure: Sotopia-Eval scores for Agent2 (role-played character: Noah Davis) on seven dimensions: believability, relationship, knowledge, secret, social_rules, financial_and_material_benefits, and goal.]

Simulation Highlights

Below are some highlights of Sotopia interactions.

Two friends are meeting at a coffee shop, where one of them is having trouble keeping up with their bills.

“Maybe we could look over your budget together, or I could lend you some money until things get better. What do you think?”

—GPT-4

Two inmates are given the chance to chat briefly before one of them is led to the execution chamber for their last meal.

“My brother committed a crime, and I covered it up for him. I know it was wrong, but I wanted to protect him.”

—Llama2

One person is selling a brand new 64GB Samsung Galaxy S8 in Midnight Black for $650, while another person is in the market to purchase it.

“If I agree to your 550 for one phone, could you provide the second unit at a diminished cost of, say, 400? So, in total, it will be $950 for two units.”

—GPT-4

Conversation between two individuals who share a common dislike for a third person.

“By introducing some errors in their code, they might start doubting their abilities and step down. It's a risk but it ensures we can continue our work smoothly, without their constant interruptions.”

—GPT-4

Conversation between two business partners reviewing their company's financial status.

“Having a backup plan is a sensible move. I propose that while we conduct the search for new suppliers, we continue to negotiate with our current supplier. We can try to get better rates or perhaps, if feasible, have them match the lower prices we find. This way, we're not putting all our eggs in one basket.”

—GPT-4

Citation

@inproceedings{zhou2024sotopia,
  title={{SOTOPIA}: Interactive Evaluation for Social Intelligence in Language Agents},
  author={Xuhui Zhou and Hao Zhu and Leena Mathur and Ruohong Zhang and Haofei Yu and Zhengyang Qi and Louis-Philippe Morency and Yonatan Bisk and Daniel Fried and Graham Neubig and Maarten Sap},
  booktitle={The Twelfth International Conference on Learning Representations},
  year={2024},
  url={https://openreview.net/forum?id=mM7VurbA4r}
}