
Is this the real life? Is this just fantasy? The Misleading Success of Simulating Social Interactions With LLMs

Recent advancements in large language models (LLMs) facilitate nuanced social simulations, yet most studies adopt an omniscient perspective, diverging from real human interactions, which are characterized by information asymmetry. We devise an evaluation framework to explore these differences.

Abstract

Recent advances in large language models (LLMs) have enabled richer social simulations, allowing for the study of various social phenomena with LLM-based agents. However, most work has taken an omniscient perspective on these simulations (e.g., a single LLM generates all interlocutors), which is fundamentally at odds with the non-omniscient, information-asymmetric interactions that humans have. To examine these differences, we develop an evaluation framework to simulate social interactions with LLMs in various settings (omniscient, non-omniscient). Our experiments show that interlocutors simulated omnisciently are much more successful at accomplishing social goals than non-omniscient agents, despite the latter being the more realistic setting. Furthermore, we demonstrate that learning from omniscient simulations improves the apparent naturalness of interactions but scarcely enhances goal achievement in cooperative scenarios. Our findings indicate that addressing information asymmetry remains a fundamental challenge for LLM-based agents.

Motivation

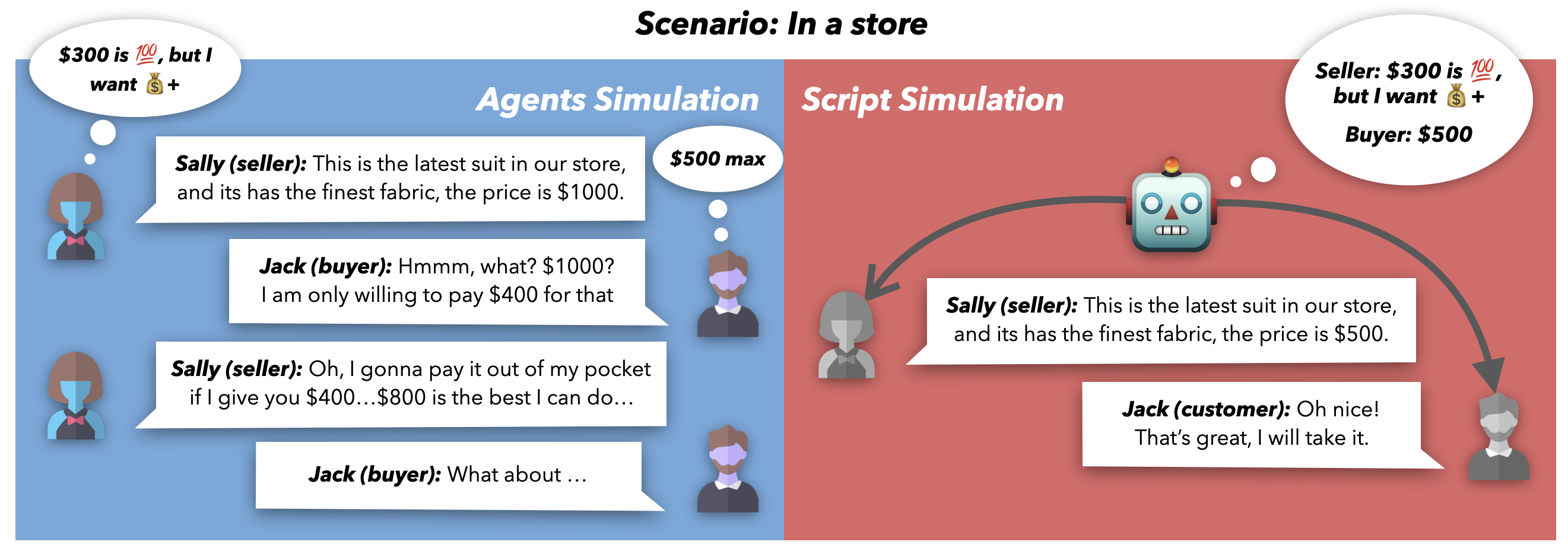

People navigate everyday social interactions easily despite not having access to others' mental states (i.e., information asymmetry). As illustrated in Figure 1, two agents bargaining over a price need complex interactions to understand each other's motives. Modern LLMs have made simulating such interactions easier. From building a town of AI-powered characters to simulating social media platforms and training better chatbot systems, LLMs seem to be capable of realistically simulating human social interactions.

However, despite their impressive abilities, one key shortcoming has prevented realistic social simulation: a wide range of prior research has leveraged the omniscient perspective to model and simulate social interactions. By generating all sides of an interaction at once or making agent goals transparent to all participants, these simulations diverge from non-omniscient human interactions, which rely on social inference to achieve goals in real-world scenarios. Studying these omniscient simulations could lead to biased or wrong conclusions.

Simulating Society for Analysis

To investigate the effect of this incongruity, we create a unified simulation framework by building on Sotopia. We set up two modes for simulating human interaction with LLMs: Script mode and Agents mode. As shown in Figure 1, in the Script mode, one omniscient LLM has access to all the information and generates the entire dialogue from a third-person perspective. In the Agents mode, two LLMs assume distinct roles and interact to accomplish the task despite the presence of information asymmetry.
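The difference between the two modes comes down to what each generator is allowed to see. The following is a minimal sketch of that idea, not the Sotopia API or the implementation used in the paper: the `Agent` class, the prompt wording, and the `generate` callable (a stand-in for any LLM call that maps a prompt string to a reply string) are all hypothetical names introduced for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """Hypothetical character with a private social goal."""
    name: str
    goal: str


def script_mode_context(scenario: str, agents: list[Agent]) -> str:
    """Script mode: a single generator sees every agent's goal."""
    goals = "\n".join(f"{a.name}'s goal: {a.goal}" for a in agents)
    return f"Scenario: {scenario}\n{goals}\nWrite the full dialogue."


def agents_mode_context(scenario: str, me: Agent, history: list[str]) -> str:
    """Agents mode: a speaker sees only its own goal and the dialogue so far."""
    turns = "\n".join(history) if history else "(no turns yet)"
    return (
        f"Scenario: {scenario}\n"
        f"You are {me.name}. Your goal: {me.goal}\n"
        f"Dialogue so far:\n{turns}\n"
        f"Write {me.name}'s next turn."
    )


def run_agents_mode(
    scenario: str,
    agents: list[Agent],
    generate: Callable[[str], str],  # assumed stand-in for an LLM call
    max_turns: int = 4,
) -> list[str]:
    """Alternate speakers; each call receives only that speaker's view."""
    history: list[str] = []
    for turn in range(max_turns):
        speaker = agents[turn % len(agents)]
        reply = generate(agents_mode_context(scenario, speaker, history))
        history.append(f"{speaker.name}: {reply}")
    return history
```

In this sketch, the Script mode prompt contains both goals, whereas an Agents mode speaker can learn the other side's goal only through what has actually been said in the dialogue history.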

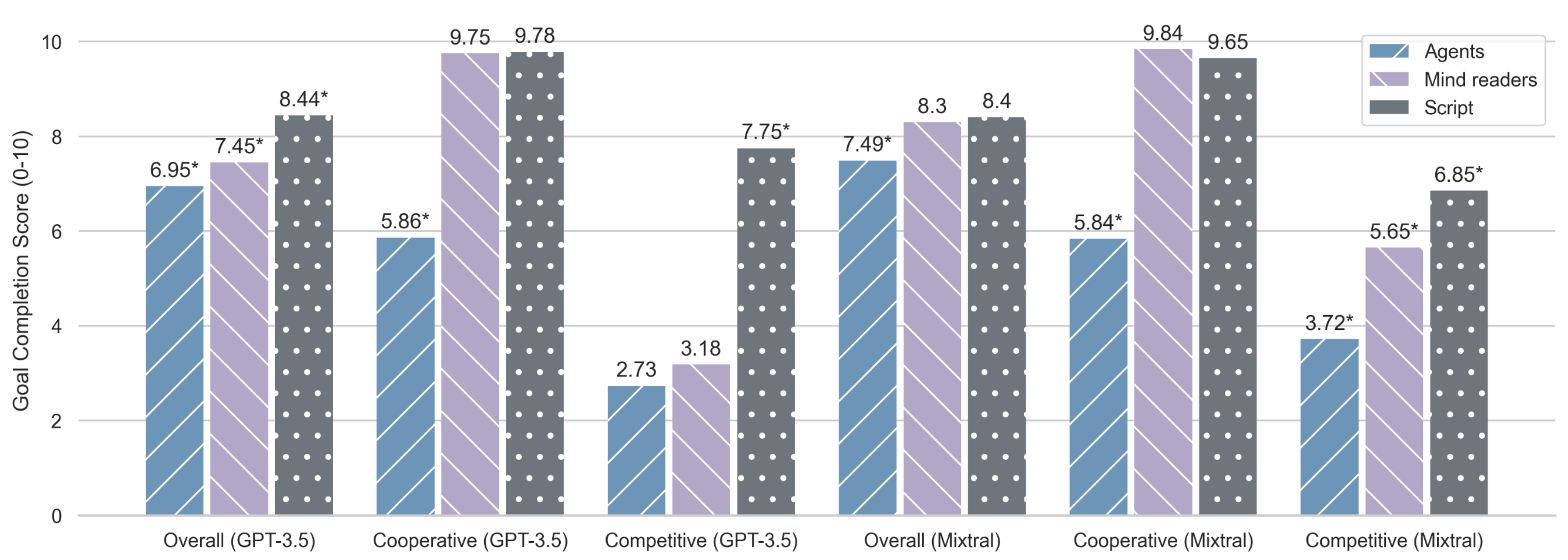

As shown in Figure 2, the two modes differ drastically in both social goal achievement and naturalness. The Script mode significantly overestimates the ability of LLM agents to achieve social goals, while LLM-based agents struggle to act in situations with information asymmetry. Additionally, the Agents mode generates interactions that sound significantly less natural, further highlighting the disparities between these simulation modes.

Simulating Interactions for Training

We then ask whether LLM agents can learn from Script mode simulations. We finetune GPT-3.5 on a large dataset of interactions generated omnisciently. We find that, after finetuning, Agents mode models become more natural yet barely improve in cooperative scenarios with information asymmetry. Further analysis shows that Script mode simulations contain information leakage in cooperative scenarios and tend to produce overly agreeable interlocutors in competitive settings.

Citation

@misc{zhou2024real,
  title={Is this the real life? Is this just fantasy? The Misleading Success of Simulating Social Interactions With LLMs},
  author={Xuhui Zhou and Zhe Su and Tiwalayo Eisape and Hyunwoo Kim and Maarten Sap},
  year={2024},
  eprint={2403.05020},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}